What's Actually Behind the AI Evaluation Hype?

- Ashish Arora

- 1 day ago

- 7 min read

What's behind the AI evaluation obsession?

Over the last few months, I noticed something strange. Every AI paper I opened, every conference talk I watched, every product launch I followed - they all kept circling back to the same word: AI evaluations.

Not training.

Not fine-tuning.

Not even prompting.

Evaluations!!

At first, I dismissed it as another case of benchmark fatigue - another leaderboard war. But this wasn't about 'measuring' models better. It was about 'teaching' them differently.

And the catalyst? A deceptively simple breakthrough that's been hiding in plain sight.

Text itself can function like a gradient.

Let me explain what that means - and why it's quietly reshaping everything from how we train models to how we build AI products around them. Let's dive in!

We've Been Sucking Supervision Through a Straw

Inspired by 'Following the Text Gradient at Scale', a beautiful write-up on the Stanford AI Lab Blog, consider this thought experiment:

Imagine you're learning to bake. You spend an hour crafting a cake - perfecting the batter, timing the bake, decorating with care. You hand it to a judge. They take a bite, think for a moment, and say: "4 out of 5."

That's it. No explanation. No feedback. Just a number.

Now you have to figure out what to change. Was the ganache too sweet? Were the layers uneven? Did they love the cherries but hate the frosting? You have no idea. So you bake another cake. And another. And another. Each time, you get a number. Each time, you're essentially guessing what went wrong.

This is how we've been training AI models, and building systems around them, for years. Techies call it Reinforcement Learning, and it works - eventually. But there's an enormous inefficiency baked into the process (pun intended!).

The evaluator 'knows' what's wrong. They've already done the reasoning. They could tell you "the ganache was perfect, but you need more cherries distributed throughout." Instead, we ask them to compress all that insight into a single scalar: 4/5.

The information is there. We're just throwing it away.

The Stanford AI Lab piece crystallized this problem. They called it "sucking supervision through a straw" - you run minutes of complex behaviour and collapse it all into one number that gets broadcast across the entire trajectory.

The phrase originally comes from Andrej Karpathy's famous talk, "We’re summoning ghosts, not building animals", which critiques the limitations of reward-based learning.
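To make the "one number broadcast across the entire trajectory" idea concrete, here is a tiny sketch. It is not taken from the Stanford post; the step names and the `broadcast_reward` helper are invented for illustration, and real RL methods add discounting and baselines on top of this basic idea.

```python
# Toy illustration: one scalar reward is copied onto every step of a trajectory.
# Step names and the 4/5 score are made up to mirror the baking example.

def broadcast_reward(trajectory: list[str], reward: float) -> list[tuple[str, float]]:
    """Assign the same episode-level reward to every step (no per-step credit)."""
    return [(step, reward) for step in trajectory]


steps = ["mix batter", "bake layers", "make ganache", "add cherries"]
credited = broadcast_reward(steps, reward=4 / 5)

for step, credit in credited:
    print(f"{step:>13}: {credit}")
```

Every step ends up with the same credit, so nothing in the signal says whether the ganache or the cherries were the problem.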

The Unlock: Text Is the New Gradient

Here's what changed. We are already seeing this shift in the new wave of Reasoning Models. These models don't just spit out an answer; they work for it. They generate internal chains of thought, explore complex pathways, hit dead ends, and use internal text feedback to backtrack and correct themselves.

All that internal 'work' creates a massive amount of signal. We just need to harness it. When we turn that reasoning capability outward, we get something we've never had before: An Evaluator that can articulate 'why'.

Think about that for a second.

Feedback-based improvement is not new. Any system that improves over time has traditionally worked this way: a feedback loop plus a grader. What LLMs add is reasoning: they can look at an output, reason about what's wrong, and 'explain' it in natural language. "The response addresses the user's question but misses the nuance about edge cases. Consider adding a caveat about timeout scenarios."

That's not a score. That's a 'direction'. A gradient, if you will - but in semantic space, not parameter space. Stanford's framework, Feedback Descent, uses accumulated textual feedback instead of scalar rewards, and applies the same loop to images and prompts.

And here's the kicker: in an ideal world, an LLM can also 'consume' that feedback and act on it. The same model that wrote the output (or a different one) can read the critique and revise accordingly. No weight updates. No gradient descent. Just... language.

Text in. Text out. Improvement in between.

This breakthrough took place when LLMs crossed a threshold. Around 2023-2024, models like GPT-4 and Claude became reliable enough to:

- Generate genuinely useful critiques (not just plausible-sounding ones)

- Consume those critiques and act on them coherently

- Do this at scale - milliseconds per evaluation, pennies per call

Suddenly, the economics flipped and researchers noticed: systems using textual feedback were learning faster with fewer iterations.

This insight has spawned an entire family of techniques.

Self-Improving Systems (converging text gradient)

Talk about magic! Feedback Descent, as described earlier, simplifies the learning process into a two-role architecture. Think of it exactly like a Junior Developer (The Editor) pair-programming with a Senior Architect (The Evaluator).

It works in a loop:

The Evaluator (The Senior Architect): Instead of just saying "Test Failed" (scalar reward), this model acts as the critic. It looks at the current attempt and produces structured, actionable feedback.

“The SQL query is syntactically correct, but joining on the unindexed ‘timestamp’ column will cause a timeout. Rewrite to use the indexed ‘created_at’ field.”

The Editor (The Junior Developer): This model takes the current best attempt plus the feedback and proposes a revision. It doesn't start from scratch; it edits.

“Understood. I will keep the logic but swap the join condition to use ‘created_at’.”

The "Secret Sauce" (Accumulation): Here is the critical difference between this and standard self-correction. In most loops, the model forgets past mistakes. But Feedback Descent maintains a memory of the journey. It feeds the Editor the current best candidate plus the history of past critiques.

It’s the difference between a Junior Dev who makes the same mistake twice, and one who says, "Okay, we already tried X and Y, and the Senior Dev said those were bad because of Z. So, logically, the only path left is Option C."

This allows the system to run stably for 1,000+ iterations. The feedback doesn't just guide one step; it compounds.
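The loop above can be sketched in a few lines. This is a toy under loud assumptions, not the Stanford implementation: `evaluate` and `edit` are rule-based stand-ins for what would be LLM calls, the `LIMIT` critique is invented, and every revision is accepted, whereas a real system would compare a revision against the current best before replacing it.

```python
# Toy evaluate/edit loop with accumulated critiques.
# evaluate() and edit() are hypothetical stand-ins for LLM calls.

def evaluate(query: str) -> str:
    """Stand-in Evaluator: return one textual critique, or "" when satisfied."""
    if "timestamp" in query:
        return ("Joining on the unindexed 'timestamp' column will time out; "
                "use the indexed 'created_at' field.")
    if "LIMIT" not in query:
        return "The result set is unbounded; add a LIMIT clause."
    return ""


def edit(best: str, history: list[str]) -> str:
    """Stand-in Editor: revise the current best using every critique so far."""
    revised = best
    for critique in history:  # condition on the full history, not only the latest
        if "created_at" in critique:
            revised = revised.replace("timestamp", "created_at")
        if "LIMIT" in critique and "LIMIT" not in revised:
            revised += " LIMIT 100"
    return revised


def feedback_loop(initial: str, max_iters: int = 10) -> tuple[str, list[str]]:
    """Alternate critique and revision until the evaluator has nothing to say."""
    best, history = initial, []
    for _ in range(max_iters):
        critique = evaluate(best)
        if not critique:
            break
        history.append(critique)      # accumulate feedback across iterations
        best = edit(best, history)    # edit the current best, do not restart
    return best, history


start = "SELECT o.id FROM orders o JOIN users u ON o.timestamp = u.timestamp"
final_query, critiques = feedback_loop(start)
print(final_query)
print(len(critiques), "critiques accumulated")
```

The part worth noticing is `history`: the editor always receives the whole list, so a fix from an earlier round is not undone in a later one.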

When you see an agentic system with well-designed evaluation loops, it feels like magic. And honestly? That's exactly what's happening inside deep reasoning agents - we just didn't have the vocabulary for it until now.

Fact: Feedback Descent in particular matched or beat specialized RL methods across drug discovery, image optimization, and prompt tuning - with up to 3.8x sample efficiency gains in molecular design alone. Same loop. Different domains. No task-specific algorithms. Just good feedback.

Why This Changes Everything (Supervisor vs Mentor)

Let me connect the dots on why this matters beyond academic curiosity.

The Evaluator Becomes the Teacher

In traditional ML, your loss function measures how wrong you are. It doesn't teach you how to be right. There's a reason we call it "supervision" - it's more like surveillance than mentorship.

But when your evaluator can say "this response is problematic because it assumes the user has admin access - rephrase to ask about their permission level first" - that's not measurement. That's 'instruction'.

The quality of your evaluator directly determines how fast your system learns. Here's the difference in practice:

Scalar feedback: Agent writes SQL query → Gets "Error: 0 rows returned" → Tries again blindly → 47 more attempts

Textual feedback: Agent writes SQL query → Evaluator says: "The query joins on `id` but the users table uses `user_id`. Change line 12 to `JOIN users ON orders.user_id = users.user_id`" → Fixed in one revision

A vague evaluator ("needs improvement") is almost as useless as a scalar. A precise evaluator is a shortcut to the solution.
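One way to keep an evaluator from drifting into "needs improvement" territory is to make it fill in a small structure. The sketch below is an assumption of mine, not something from the Stanford post: the `Critique` fields and the `is_actionable` check are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Critique:
    """Hypothetical schema an evaluator could be asked to fill in."""
    problem: str
    location: str = ""       # where the issue is, e.g. "line 12"
    suggested_fix: str = ""  # what to change

    def is_actionable(self) -> bool:
        """A critique is actionable only if it says where and how to fix."""
        return bool(self.location.strip()) and bool(self.suggested_fix.strip())


vague = Critique(problem="needs improvement")
precise = Critique(
    problem="The query joins on `id` but the users table uses `user_id`.",
    location="line 12",
    suggested_fix="JOIN users ON orders.user_id = users.user_id",
)

print(vague.is_actionable(), precise.is_actionable())
```

A pipeline could reject or re-request any critique where `is_actionable()` is false before it ever reaches the editor.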

Agents Need Rich Feedback to Navigate

Consider modern AI agents - systems that plan, use tools, browse the web, write code, and iterate over minutes or hours. When an agent fails after 47 steps, what do you learn from a binary "success/failure" signal?

Nothing actionable.

But if an evaluator can trace through the execution and say "Step 23 was the critical error - you queried the API before authenticating, which cascaded into the timeout at step 31" - now you have something. The agent (or a separate training process) can target exactly what went wrong.
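Here is a minimal sketch of that kind of trace review. The `Step` type, the action names, and the single ordering rule are all hypothetical; in practice the evaluator would be an LLM reading the trace, not a hard-coded check.

```python
from dataclasses import dataclass


@dataclass
class Step:
    index: int
    action: str  # hypothetical action labels, e.g. "authenticate", "query_api"


def find_root_cause(trace: list[Step]) -> str:
    """Rule-based stand-in for an evaluator that pinpoints the failing step."""
    authenticated = False
    for step in trace:
        if step.action == "authenticate":
            authenticated = True
        elif step.action == "query_api" and not authenticated:
            return (f"Step {step.index} was the critical error: "
                    "the API was queried before authenticating.")
    return "No ordering violation found."


failed_trace = [
    Step(22, "load_config"),
    Step(23, "query_api"),
    Step(24, "authenticate"),
    Step(31, "timeout"),
]
print(find_root_cause(failed_trace))
```

Compared with a binary "failure", the returned sentence names the step and the reason, which is exactly what the agent needs to target a fix.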

Better feedback, faster learning. That's the whole game now.

The State of Modern Evaluation

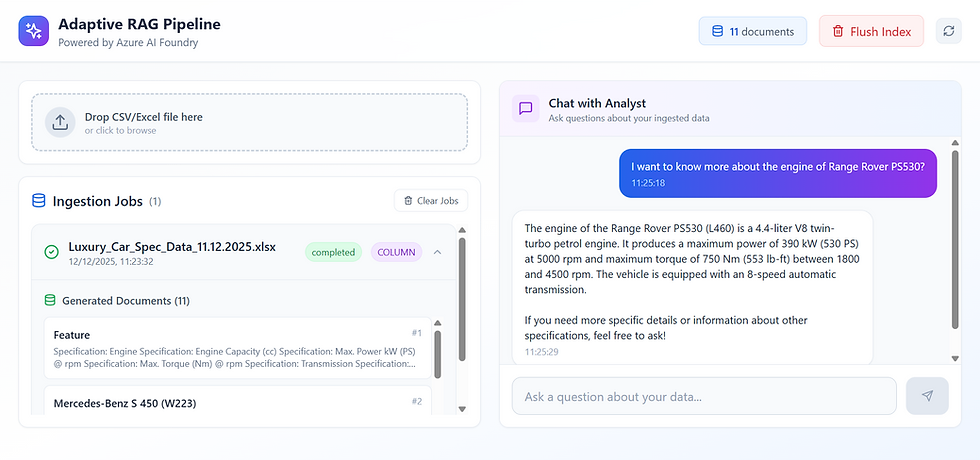

Evaluator infrastructure is becoming first-class. Tools like LangSmith, Braintrust, and Weights & Biases Weave are building entire platforms around LLM evaluation - not as an afterthought, but as the core product. The message is clear: if you're building with LLMs, your evaluation pipeline deserves as much attention as your model choice.

Specialized evaluator models are emerging. Instead of using general-purpose LLMs as judges, teams are fine-tuning models specifically for evaluation. Anthropic's Constitutional Classifiers held up against jailbreaks through 3,000+ hours of red-teaming. The evaluator itself is becoming a trainable, improvable component.

Evaluation is moving from "end of pipeline" to "inside the loop." The old pattern was: build system → deploy → evaluate occasionally. The new pattern is: evaluate 'every' intermediate step, feed critiques back immediately, iterate before deployment. Agentic systems like OpenAI's Deep Research and Google's AI Overviews run internal evaluation loops continuously during execution.
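The "inside the loop" pattern can be sketched as a pipeline that checks each step's output and retries with the critique before moving on. Everything here is illustrative: `plan`, `draft`, `check`, and the citation rule are made-up stand-ins, and this says nothing about how any particular product implements its loops.

```python
from typing import Callable

StepFn = Callable[[str], str]  # a step receives the last critique ("" if none)


def plan(critique: str) -> str:
    return "1. search 2. summarize"


def draft(critique: str) -> str:
    text = "LLM judges can give textual feedback."
    # The toy step only adds a citation once the critique asks for one.
    return text + " [1]" if "citation" in critique else text


def check(name: str, result: str) -> str:
    """Stand-in evaluator: return a critique, or "" if the output passes."""
    if name == "draft" and "[1]" not in result:
        return "Add a citation for the main claim."
    return ""


def run_pipeline(steps: list[tuple[str, StepFn]], max_retries: int = 2):
    """Evaluate every intermediate output and feed the critique straight back."""
    outputs, log = [], []
    for name, step in steps:
        critique = ""
        for attempt in range(max_retries + 1):
            result = step(critique)
            critique = check(name, result)
            log.append((name, attempt, critique or "ok"))
            if not critique:
                break
        outputs.append(result)  # kept even if retries ran out
    return outputs, log


outputs, log = run_pipeline([("plan", plan), ("draft", draft)])
for entry in log:
    print(entry)
```

The draft step fails its first check, receives the critique, and passes on the retry, all before the pipeline produces its final output.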

AI is evaluating AI at scale. The RLAIF paradigm (Reinforcement Learning from AI Feedback) has gone mainstream. Why pay humans to label outputs when an LLM can generate nuanced critiques in milliseconds? The economics alone are forcing the shift - but the quality is keeping pace.

What This Means for You

If you're building AI systems, here's the practical takeaway: Your evaluator is now an important component. Not only your model. Not only your prompt. Your evaluator as well.

A mediocre model with an excellent evaluator will outperform an excellent model with a mediocre evaluator - because the feedback loop compounds. Every iteration, every revision, every self-correction is guided by how well your evaluator can articulate what's wrong and what to do about it.

The Bottom Line

Here's the one thing I want you to remember:

Text is the new gradient.

The evaluation obsession isn't about better benchmarks. It's about a fundamental shift in how AI systems learn. For decades, we compressed rich human judgment into numbers because that's what our algorithms could handle. LLMs broke that constraint. Now we can evaluate in natural language - and more importantly, we can 'learn' from natural language evaluation.

RL is beautiful but it threw away almost everything evaluators had to say. We're finally picking it back up.

The next time you're designing an AI system, don't just ask "how will I measure success?" Ask "how will I 'explain' failure in a way that teaches the system to do better?" That question - and your answer to it - might be the most important architectural decision you make.
